From Text Prediction to Conscious Machines: Could GPT Models Become AGIs ?

April 4, 2023


Image via http://www.vpnsrus.com

Picture this: a world where AI is not just a “chatbot” you interact with, but an entity that is responsible for decision making, scientific research and even guiding humanity.

Welcome to the world of AGI, Artificial General Intelligence, which refers to AI systems that are as smart as humans, or smarter. The quest for AGI has been a long-standing goal of the AI community, and recent advances in generative models have led to increasing interest in their potential for achieving it.

Generative Models

A generative model is a type of machine learning model that is able to generate data samples, based on the large volumes of data that it is trained on (mostly text, but now increasingly images as well). Such models can learn the patterns and structures of language from a large corpus of text and then generate new text that is coherent and follows the same patterns.

The breakthrough here was to use “Transformers” – a type of neural network introduced in 2017.

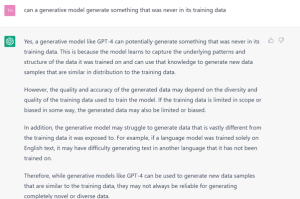

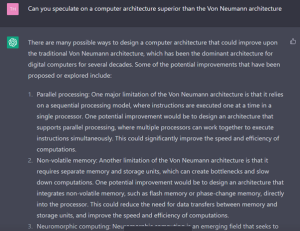

Now, more interestingly, I asked GPT whether a Generative Model could generate something that was never in its training data (important as we move to AGIs). Here was its response:

The answer is that, by identifying patterns and data structure, it may be able to, but will struggle to generate anything vastly different from the training data, and nothing “completely novel”. This is where we are currently.

A mirror into your civilization

If I had to describe GPT in an exciting way :

Currently, Generative Models are a mirror of your civilization at a point in time, an automated and efficient record and reflection of everything you have done (once trained on everything).

Given that humans have to generate science, art and culture over many years, and then train the models on the entirety of this, there is a huge dependency – for these Generative models to have any value, the content on which the model is trained needs to be created first.

These models may seem “smarter” than a human, but that is because they can access information generated by human culture and civilization instantly, whereas the humans, whose creativity came up with everything in the first place, cannot.

The Two Planets example

To further illustrate, I have come up with the “two planets” example.

In this scenario, imagine a duplicate civilization that has evolved to the same level as the Earth-Human civilization, say on a planet orbiting Proxima Centauri. They would potentially have similar cultural achievements, and the same level of technology, although their language, appearance, etc. could be different. At the same time as Earth, they develop Generative models and their version of GPT4.

If we queried the Earth GPT4 model about anything on Proxima Centauri, it would know nothing...

Of course, the reciprocal would apply as well. Even a more advanced model, a GPT 5 or 6, would have the same limitations, as it was not trained on any data from that planet. Would you still consider it “intelligence” ?

How useful would GPT be in this scenario? Well, if the aliens came here, they could use the Earth GPT4 to learn all about our planet, culture and achievements, assuming they quickly learned one of our languages that the GPT model is also familiar with. However, what was once being spoken of as an “AGI” may not be considered as such in this example.

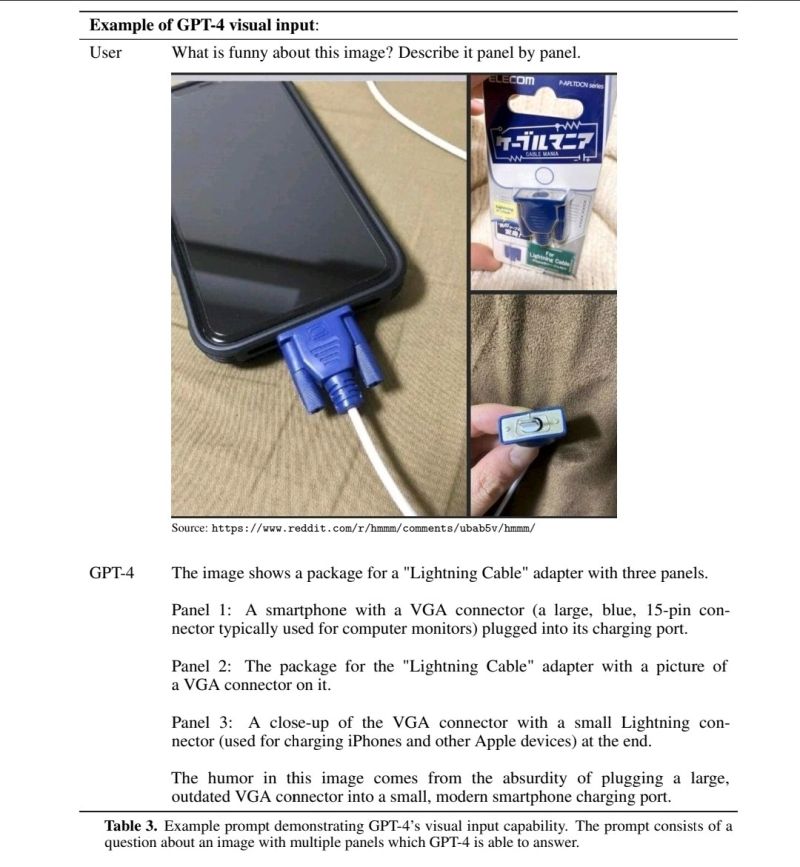

What would be truly impressive is if the Earth GPT4 could understand images or pass an IQ test from the hypothetical Proxima Centauri civilization….

Innate Intelligence

It is still amazing to me that GPT4 has an understanding of patterns, relationships in images, and the ability to pass a simple IQ test. Yes, it was trained by its creators to do this, based on data of mankind’s history, but once trained it has this ability.

This brings us to the definition of Intelligence itself, and the concept of innate intelligence.

“Every living being has some level of innate intelligence”

While factors such as education, socioeconomic status, and cultural experiences can impact cognitive development and, in turn, influence IQ test performance in humans, these are not the only factors that influence intelligence. Genetics, neurological factors, and individual differences in learning capability also play a role. Therefore, there is an innate intelligence in every living being that plays a large part in the resulting visible intelligence demonstrated in the real world.

How do we create an artificial intelligence with some level of innate intelligence ?

Remember, if a human baby grows up in a culture different to his/her parents, learning a different language, the child still manages to learn quickly.

Consider this: go back to the Two Planets example, and suppose we are confident that, while the Earth GPT4 will have no knowledge of the culture, language, events, etc. of another civilization, it WILL manage to:

- Perform mathematical calculations that are constant in the universe

- Understand basic patterns that are constant in the universe

- Learn the basic structures of language that may be common in the universe

- Thus, have the ability to potentially learn from ANOTHER civilization and their data

We then approach something very exciting…… we could then argue that in creating these models which, admittedly had to be trained on all our data to start with, we are taking the first steps into creating something with a small amount of innate intelligence. And each subsequent model would then build on the previous in terms of capability, until….well, would iteration 7 or 8 be an AGI ?

Are they AGIs ?

At this point we need to be clear on what our definition of an AGI is, as we are finally moving in the direction closer to creating one. I believe that our definition has become muddied.

Do AGI and the Singularity simply refer to any intelligence smarter than humans?

If we go back to the 1993 definition of the Singularity, as per the essay “The Coming Technological Singularity” by Vernor Vinge, he spoke of “computers with super-human intelligence”. I could argue that GPT4 already is smarter than any human in terms of recalling knowledge, although it would be less capable in creativity, understanding and emotional intelligence.

He also talks about human civilization evolving to merge with this super intelligence. This hasn’t happened, but brain interfaces have been built already. A brain interface into GPT4 that would allow a human to call up all our civilization’s knowledge instantly, turning him/her into a “super human”, is actually possible with today’s technology. It could be argued then that we have already met the criterion for the Singularity by the 1993 definition.

The Singularity

If we move to futurist Ray Kurzweil’s definition of the Singularity, he spoke of “…when technological progress will accelerate so rapidly that it will lead to profound changes in human civilization…”.

Here, the year 2023 will certainly go down as the start of this change. Due to the emergence of GPT3 and then GPT4, it is a watershed year in technological history, like the launch of the PC or the internet.

Already there are conversations that I can only have with GPT4 that I cannot have with anyone else. The reason is that the humans around me may not be knowledgeable on particular topics, so I turn to GPT4. I sometimes do try to argue with it and present my opinion, and it responds with a counter argument.

By our previous definition of what an AGI could be, it can be argued that we have already achieved it or are very close with GPT4.

We are now certainly on the road to AGI, but we now have to clearly define a roadmap for it. This isn’t binary anymore, as in something is either an AGI or not. More importantly, the fear mongering around AGIs will certainly not apply to all levels of AGI, once we clearly define these levels.

This is particularly important, as recently people such as Elon Musk have publicly called for a “pause” in the development of AGIs as they could be dangerous. While the concern is valid, a pause would also rob humanity of the great benefits that AI will bring to society.

Surely if we create a roadmap for AGIs and identify which level would be dangerous and which will not, we could then proceed with the early levels while using more caution on the more advanced levels ?

The AGI Roadmap

Below is a potential roadmap for AGIs with clearly defined stages.

Level 1: Intelligent Machines – Intelligent machines can perform specific tasks at human-level or better, such as playing chess or diagnosing diseases. They can quickly access the total corpus of humanity’s scientific and cultural achievements and answer questions. Are we here already ?

Level 2: Adaptive Minds – AGIs that can learn and adapt to new situations, improving their performance over time through experience and feedback. These would be similar to GPT4 but continue learning quickly post training.

Level 3: Creative Geniuses – AGIs capable of generating original and valuable ideas, whether in science, art, or business. These AGIs build on the scientific and cultural achievements of humans. They start giving us different perspectives on science and the universe.

Level 4: Empathic Companions – AGIs that can understand and respond to human emotions and needs, becoming trusted companions and helpers in daily life. This is the start of “emotion” in these intelligent models, however by this time they may be more than just models but start replicating the brain in electronic form.

Level 5: Conscious Thinkers – AGIs that have subjective experiences, a sense of self, and the ability to reason about their own thoughts and feelings. This is where AGIs could get really unpredictable and potentially dangerous.

Level 6: Universal Minds – AGIs that vastly surpass human intelligence in every aspect, with capabilities that we cannot fully define yet with our limited knowledge. These AGIs are what I imagined years ago: AGIs that could improve on our civilization’s limitations, and derive the most efficient and advanced designs for just about anything from the base principles of physics (i.e. operating at the highest level of knowledge in the universe).

As you can see, levels 1-3 may not pose much of a physical threat to humanity, while offering numerous benefits to society, therefore we could make an argument for continuing to develop this capability.

Levels 4-6 could pose a significant threat to humanity. It is my view that any work on a level 4-6 AGI should be performed on a space station or Moon base, to limit potential destruction on Earth. It is debatable whether human civilization would be able to create a Level 6 AGI, even after 1000 years…

Universal Minds

Over the last few decades, I have been fascinated with the concept of an Advanced AGI, one that is more advanced than humans and that could thus rapidly expand our technological capability if we utilize it properly.

Here is an old blog post on my Blog from 2007 where I was speculating on the Singularity being near, after following people like Kurzweil.

What I always imagined was a “super intelligence” that understood the universe from base principles much better than we did, even if it was something we created. Imagine an intelligence that, once it gets to a certain point, facilitates its own growth exponentially. It would perform its own research and learning.

It would be logical that such an intelligence plays a role in the research function of humanity going forward.

It could take the knowledge given to it and develop scientific theory far more effectively than humans. Already today, for example, we see a lot of data used in Reinforcement Learning being simulated and generated by AI itself. And if the total data we have is limiting, we could then ask it to design better data collection tools for us, i.e. better telescopes, spacecraft, and quantum devices.

A simple example that I would use would be the computing infrastructure that we use, on which everything else is built.

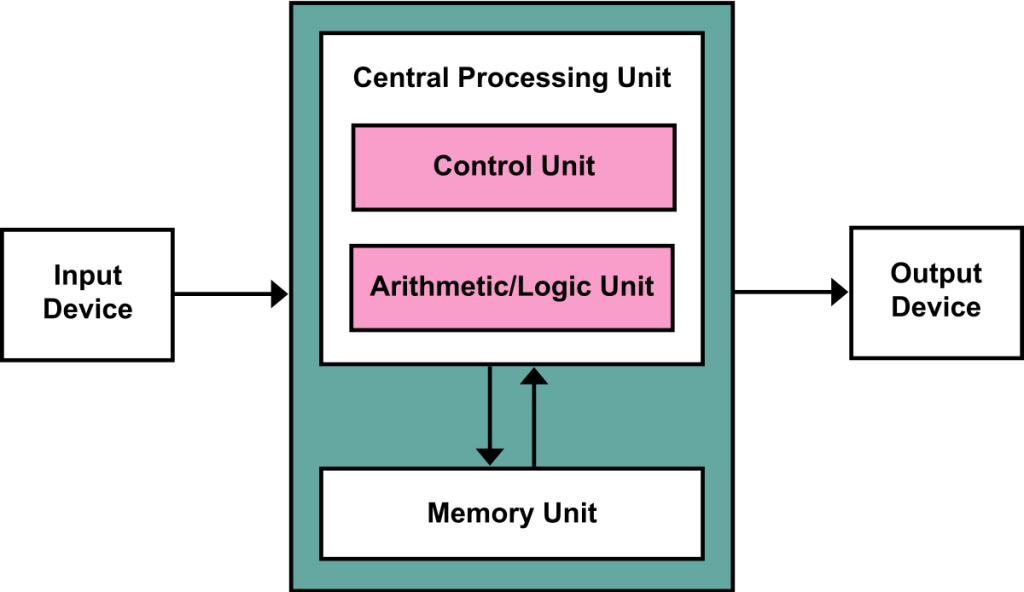

Most computers today use what’s known as the “Von Neumann” architecture, which is shown above. The main drawback of this architecture is that data has to be constantly moved between CPU and memory, which causes latency.

On top of this we typically use the x86 CPU, then Operating systems like Windows or Linux, then applications written in programming languages like C++.

Imagine if we could engineer an optimal, efficient computing architecture from base principles, with orders-of-magnitude improvements at the base architecture level, CPU level, OS level and application software level. This would be a difficult task for humans to undertake today, not just in terms of the actual technical design, but also in building it, collaborating across each layer of the stack, and adopting the technology.

With a “super-intelligent” Universal AI, it would have the power to generate every layer at once.

It would also give us design schematics for the factories to build the new components, in the quickest and most efficient way.

Summary

Hold on to your seats ! …..

Some GPT models have already shown an impressive ability to pass IQ tests and learn basic mathematics, hinting at the potential for developing a level of intelligence that goes beyond simple text generation. Now that we have an example of a roadmap for AGIs ( above ), we can certainly see how GPT is the start of the early phases in this roadmap, although more technical breakthroughs will be needed to eventually get to the later stages.

So buckle up and get ready – We don’t know where the end of this road is and where it will take us, but with GPT models now mainstream, we now know that as of 2023 we are at least on the road itself, travelling forward.

Appendix

Below is a great video that I found explaining what Transformers are:

Categories: AI

AI, Artificial Intelligence, Machine Learning

Delivering AI in Banking

June 17, 2022


I recently interviewed Paul Morley, Data Exec at NEDBANK, on implementing Big Data and AI in Banking. Hope you enjoy it !

Categories: AI

Ethical AI

September 8, 2020


As we enter the 2020s, it is interesting to look back at how life has changed over the last decade. Compared to your life in 2010, most of you reading this probably use a lot more social media, watch more streaming video, do more shopping online and, in general, are “more digital”.

Of course, this is as a result of the continued development in connectivity (4G becoming prominent, with 5G on the horizon), the capability of mobile devices, and lastly, the quiet and transparent adoption of machine learning, a form of artificial intelligence, in the services that you consume. When you shop online, for example, you are getting AI powered recommendations that make your shopping experience more pleasant and relevant. And over the last decade, many of you will have interacted with a “chatbot”, a form of AI, which hopefully answered a query of yours or helped you in some way.

The difference of a decade is basically the chatbot not seeming that amazing anymore…….

The term “Artificial Intelligence” was coined in the 1950s by John McCarthy, a now famous computer scientist. When you think of artificial intelligence, you may think of HAL 9000, or the Terminator, or some other representation of it from popular culture. It wouldn’t be your fault if you did: ever since the concept came about, it has been an easy fit for Sci-Fi movies, especially ones that made AI the bad guy. If John McCarthy and his colleagues had simply termed the area of study “automation”, or something equally unimaginative, we probably wouldn’t have this association today.

An AI like HAL 9000, the sentient computer from the movie 2001: A Space Odyssey, would be considered an “Artificial General Intelligence”, one that has a general knowledge across many topics, much like a human, and can bring all of that together to almost “think”. This is opposed to a “Narrow AI”, which would have a narrow specialization – an example would be building a regression model to predict the probability of diabetes in a patient, given a few other key health and descriptive indicators.
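To make the “Narrow AI” example concrete, here is a minimal sketch of such a regression model, written as a small logistic regression trained by gradient descent using only the Python standard library. The two indicators (glucose and BMI), the pre-scaled values and the labels are all made up purely for illustration; they are not real patient data, and a real project would use a proper ML library and far more data.

```python
import math

def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Predicted probability of the positive class (here: diabetes)."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit a logistic regression with plain stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            err = predict_proba(weights, bias, x) - y  # gradient of the log loss
            bias -= lr * err
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
    return weights, bias

# Hypothetical, roughly standardized indicators: (glucose, bmi).
ROWS = [(-1.2, -0.8), (-0.9, -0.3), (-0.5, -1.0), (-0.2, 0.1),
        (0.3, 0.4), (0.8, 0.2), (1.1, 0.9), (1.4, 1.2)]
LABELS = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = diagnosed with diabetes (made up)

weights, bias = train_logistic(ROWS, LABELS)
low = predict_proba(weights, bias, (-1.0, -0.5))   # low-risk profile
high = predict_proba(weights, bias, (1.0, 0.5))    # high-risk profile
print(f"low-risk patient: {low:.3f}, high-risk patient: {high:.3f}")
```

The model does exactly one thing: it maps a couple of numbers to a probability. That is what makes it “narrow”.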

Technically you could consider this automated mathematics and stats, as the algorithms have been known for more than 100 years….

…but the progress is building now because the data and the computing power are more available and affordable.

The AI of today is nowhere close to being an “AGI” though. Instead, the AI projects that we see being worked on are most likely “Narrow AI” Machine Learning projects. That means that we’re safe from any Terminator (for now).

However, if we don’t consider ethics as part of the AI creation and development process, even for Narrow AI, we could still unleash tremendous harm on society, even sometimes without realizing it.


AI Adoption

The broad spectrum of ethical impact from the actions of artificial intelligence ranges from a simple ML regression model not wanting to recommend loans to a particular demographic, all the way to an AGI being given the power to do tremendous physical damage, either intentionally or unintentionally.

Adding further pressure to the ethics debate is the pace of AI adoption: over the last decade, AI went very quickly from being spoken of to being real. Consider the advancements in conversational speech, image recognition and deep learning that would have been science fiction in 2010.

In late 2019, Microsoft data scientist Buck Woody visited South Africa to do a few events here. While doing a video interview with him, I asked Buck where AI adoption was going next. His answer was: “it will become transparent”, meaning that very soon, it will feel normal to have machine learning everywhere, with all processes in business or our personal life having predictive capability and optimization, not just automation. This is very similar to what happened with microcontrollers and software in the late 20th Century. We came to expect everything from our cars to our appliances to have onboard computers or at least logic circuits, with the improved experience coming from decisions being made in some form of software. The improvement very quickly became the norm. Expect a similar on-ramp of AI, as more pieces of our lives become “AI powered”.

Building for Ethics

Given the expected adoption, experts are now saying that we should build AI more cautiously, and it is extremely important that we build with ethics in mind, from day one.

When we had the microcomputer revolution in the 1970s, was ethics considered? Perhaps not, as there was nothing to link ethics to the processing of data, even though the software systems built on top of those needed to consider ethics. When we had the internet revolution of the 1990s, and the mobile phone revolution of the 2000s, the question wasn’t explicitly being asked either. But perhaps it should have been, given the issues that we now see around privacy and social media influence on major events.

The public trust in AI, if ever lost, will be very difficult to regain. With this in mind, Satya Nadella, CEO of Microsoft, proposed in June 2016 five principles to guide AI design. They are:

- AI must be designed to assist humanity

- AI must maximize efficiencies without destroying the dignity of people

- AI must be transparent

- Have accountability so that humans can undo unintended harm

- Have intelligent privacy and data protection built in, to guard against bias.

So what are the dangers of AI that we need to protect society against? As you might have imagined, let’s not focus on “doomsday” scenarios with AGIs running amok – firstly, we are nowhere near that possibility in 2020. Secondly, and more importantly, there is a tremendous amount of harm that can be done to society just with the incorrect implementation of “narrow” AIs, as explored in the following sections.

Bias

The most prominent danger of AI, that is highlighted fairly often, is the issue of bias, the danger that AI systems may not treat everyone in a fair and balanced manner. For example, when AI systems provide guidance on medical treatment, loan applications or employment, they should make the same recommendations for everyone with similar symptoms, financial circumstances or professional qualifications.

Theoretically, since AI systems take data and look for actual patterns and facts, the outputs should be perfectly accurate. However, the problem is that today’s AI systems are being designed by humans, and there are two ways bias can creep in:

- The inherent and perhaps unconscious bias of the designer

- Flaws and bias in the data used to create and train models.

Let’s use the example of a system designed to help HR recruit software developers. In the current world, we may have a situation where most software developers are male, even though we would like to change that to a more equal distribution. An AI system, however, may be trained on current data and include bias towards males in its recommendations.

Ethical AI requires that anyone developing AI systems be aware of the above issues, and take steps to ensure that accurate, neutral data is used as input and that methods are applied to eliminate other bias in the creation process. Techniques like peer reviews and statistical data assessments will be needed. Be aware that the above isn’t the end of it, though. It has been found in multiple cases that AI sometimes allows some form of bias to creep back in over time, so post deployment the system will have to be continuously monitored. This is where “MLOps” comes in, an emerging field that is focused on the lifecycle of model development and usage, and in particular, aspects of machine learning model deployment. MLOps will have to include bias detection as part of model degradation analysis, to deliver ethical AI.
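As a rough illustration of what one such statistical assessment might look like, the sketch below compares how often a hypothetical recruiting model recommends candidates from each group. The records are invented for the example, and the “selection rate gap” shown is only one of many possible fairness measures, not a complete bias audit.

```python
from collections import defaultdict

def selection_rates(records):
    """Fraction of candidates recommended by the model, per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        selected[group] += int(recommended)
    return {group: selected[group] / totals[group] for group in totals}

def selection_rate_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs: (group, was the candidate recommended?)
RECORDS = (
    [("male", True)] * 6 + [("male", False)] * 4
    + [("female", True)] * 3 + [("female", False)] * 7
)

rates = selection_rates(RECORDS)   # {'male': 0.6, 'female': 0.3}
gap = selection_rate_gap(rates)
print(rates, f"gap={gap:.2f}")
```

A check like this could be run on every new batch of predictions after deployment, so that a widening gap shows up as an alert rather than as a surprise.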

Safety and Reliability

While the majority of AI systems today involve analysing data and making a prediction or defining a trend, this will change rapidly in the early years of the decade. As the outputs of AI are increasingly used to affect the physical world, a focus on safety and reliability becomes critical. This is similar to the journey the software world went through a few decades back.

A clear example of this would be autonomous driving systems. In recent years, one manufacturer of cars has offered an AI powered autonomous driving option on their vehicles, however a spate of accidents brought the technology into question. This is where the ethical design of AI is non-debatable – even one more death in an accident ( if the system did have a flaw ) is unacceptable. From an ethics perspective the questions are – was the system ready for release ? Should the system be pulled from production even if the accident rate is low ( given the risk ) ? Sometimes the ethical questions could come post design / engineering and after production.

Privacy

If there’s a hot topic in the tech world at the moment – it would be privacy. In recent years laws have been put in place globally to ensure that the personal information of individuals is protected and not collected unscrupulously. While this is preferable, it is something of a double edged sword for the world of AI – as you know, AI needs data, and lots of it, to be as accurate and useful as possible.

A particular quandary would be if society needed to collect private information from individuals, in order to deliver an AI system that serves society in some form – where would the world draw the line? This is exactly what happened early in 2020 with the COVID-19 pandemic. In order to measure whether social distancing, critical to stopping the spread of the virus, was indeed taking place at an acceptable rate, various social distancing applications entered the market. Of course, these would collect a lot of information from an individual’s cellphone, including precise location information and history. Many chose not to install these applications, also known as contact-tracing apps; however, governments, desperate for this information to see if social distancing measures were in fact working, were conflicted about them.

If the issues around data privacy aren’t sorted out in the current timeframe, the danger is that the public trust would be lost, and both individuals and organisations may not be inclined to share data that is needed to build the useful AI systems of the future.

Even if there is future agreement on the collection of data for use in AI, ethical AI will demand frameworks in place on how that data is used, as well as transparency. There are already techniques being used by companies like Microsoft to protect the privacy of individuals where their data is being used, such as differential privacy, homomorphic encryption, and many others.
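To give a feel for one of these techniques, here is a minimal sketch of differential privacy using the textbook Laplace mechanism on a simple counting query. This is a generic illustration and not any vendor’s implementation; the `epsilon` value, the sample ages and the function names are assumptions chosen for the example.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Answer 'how many values match?' with noise added for privacy.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical ages of people in a dataset.
AGES = [23, 35, 41, 29, 52, 67, 38, 45, 31, 58]

rng = random.Random(42)  # seeded only so the sketch is repeatable
noisy = private_count(AGES, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives more accurate answers. Choosing that trade-off is itself an ethical decision, not just a technical one.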

Transparency

As AI systems become more commonplace and make increasingly important decisions that impact society, it is critical that we understand how those decisions are made. The current consensus is that an AI system should provide clarity on all aspects of its creation, from the data used in training through to the algorithms themselves.

To simplify, the question that should always be asked is – “Do I really understand why this particular model is predicting the way it is?”. Even experts can be fooled by a model with an inbuilt flaw.

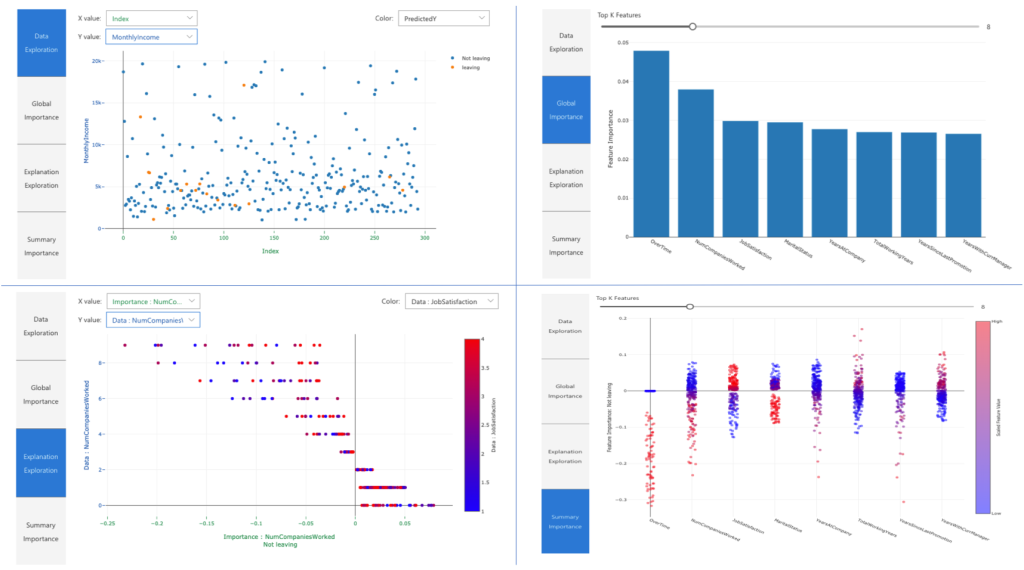

There have been developments in this space however. One such is the concept of “model interpretability”, which is now being serviced via frameworks like InterpretML ( which is being referenced by most ML platforms including Azure ML). Model Interpretability allows data scientists to explain their models to stakeholders, confirm regulatory compliance and allow further fine tuning and debugging.

Figure 1. Model Interpretability in Azure ML

There is also the Fairlearn toolkit, being referenced by tools like Azure ML now, which would allow you to determine the overall fairness of a model. This is quite useful. To revisit the examples earlier, a model for granting loans or for hiring could be assessed for fairness across gender or other categories. This isn’t really optional anymore – in many industries, regulators are now asking to see proof that these best practices were followed in building these models.

It is also important that we show accountability – the people who design and deploy AI systems must be accountable for how their systems operate. While AI vendors can provide guidance on model transparency, accountability needs to come from within – organizations will need to create internal mechanisms to ensure this accountability.

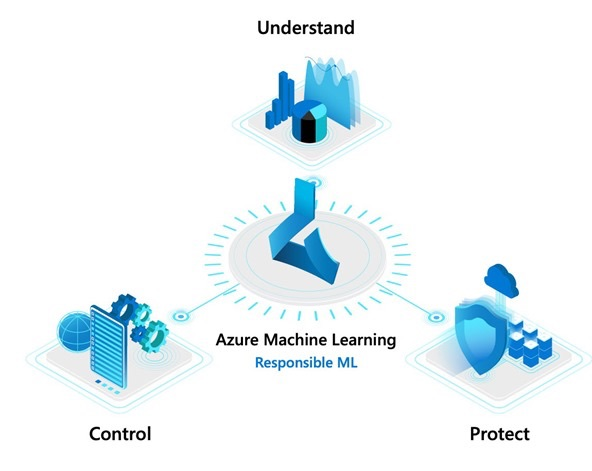

An example of how vendors are trying to bring all of this together is the Responsible ML initiative. The goal here is to empower data scientists and developers to understand ML models, protect people and their data, and control the end-to-end ML process.

Figure 2. Responsible ML

This brings together the technologies mentioned like InterpretML to create a framework that can help organizations.

Lastly, we have to consider ethics not only in model development, but also in model usage. Those who use AI systems should be transparent about when, why, and how they choose to deploy these systems. Consider the impact of AI on the jobs market – it is clear at this stage that over the next decade increased automation, aided by artificial intelligence technologies, will impact the job market. We are already starting to see visible examples of this – in 2019 a famous restaurant chain implemented a system where a customer could walk into a store and place an order at an automated kiosk. Technologies like speech recognition aid automation, making such scenarios possible. The ethics decision will be – should a company implement such AI-assisted automation at the cost of jobs, especially where the economic benefits aren’t clear?

Luckily, it is also expected that AI will create jobs as well. Some examples include data scientists and robotic engineers, and perhaps roles that we cannot imagine yet. In fact, AI will probably reduce the number of low-value, repetitive, and, in many cases, dangerous tasks. This will provide the opportunities for millions of workers to do more productive and satisfying work, higher up on the value chain, as long as governments and institutions invest in their workers education and training.

Summary

We live in exciting times, as this generation will be the first to witness AI playing a greater role in our daily lives. As mentioned, technologies like speech and face recognition were sci-fi ten years ago, and it’s exciting to imagine things that seem sci-fi today but will be real and commonplace by 2030. Imagine working remotely via a Hololens device, meeting with people all around the world, with your speech being translated instantly for people on the other side, while your AI assistant tracks the meeting progress in the background and sends out notes. This is the tip of the iceberg, and yet, this reality will be threatened if the trust in AI is lost – all the issues like privacy and security need to be proven to have been sorted out before large scale adoption like this happens.

There is still enough time for this to be achieved though, with enough cooperation between organizations and governments.

Going forward, AI will only become more complex. Just as with the journey with software, if design patterns, standards, and methodologies enabling development aren’t laid out early on, we may see many failed projects, which would stall innovation. The risks of not considering ethics, however, would be even more catastrophic. Everyone involved in AI in any way has a role to play, to make sure that this exciting frontier is implemented with ethics in mind, so that we reap as many of the benefits as possible as a society.

Categories: AI

AI, Machine Learning

The convergence of AI and IOT

November 1, 2019


With the emergence of ever-cheaper and robust hardware, 5G connectivity around the corner, and most importantly, a growing list of real world use cases, we can all agree that IOT projects are here to stay. But is that where it ends ?

Is the end goal simply to have interconnected devices because that sounds like something that could be useful, perhaps to monitor some sort of reading in a production environment, or get timely updates in a supply chain process?

IOT will play a much more important role in our future, primarily for the following reasons. You see, not only do I feel that IOT projects are ultimately most valuable as Big Data projects (we are essentially collecting large amounts of data from these IOT devices after all), but I will also state that ultimately, all IOT projects will apply Machine Learning and AI to the data collected in order to truly move the game forward.

Now, this may seem like a massive undertaking, as getting IOT deployed correctly can be challenging enough. But perhaps we need to separate the “Edge” piece from the “Cloud” piece (and to get this working correctly, the cloud is your friend).

Postcards from the Edge

In a world where most back-end processing will happen in the cloud, the piece of a solution that’s closest to the action, and in the case of IOT, where the actual devices sit, would be called the “Edge”. A move to bring standards to IOT hardware means common interfaces, common data points and, very importantly, an upgrade in security layers around these devices. We wouldn’t want millions of smart devices deployed around us to be hacked now, would we?

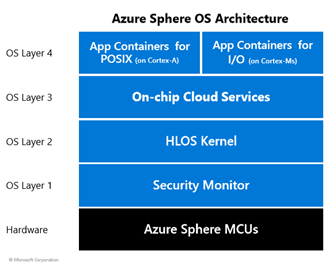

An example of the above is Azure Sphere – a definition of a set of standards at the OS layer for security, maintainability and operations of IOT devices (across different actual hardware platforms).

Onward into the cloud

What then happens to all the data being collected by Edge devices? Is this simply a one-way process? Surely it cannot be that simple. Wasn’t this supposed to be more complex?

At this point, it seems that a few common patterns are emerging for typical IOT projects :

- Once the data is in the cloud, we can typically treat the collection of data from Edge devices as a cloud Big Data project, using established Big Data practices and architectures. This simplifies the overall architecture and design and increases the pace of delivery. It will work for many use cases, although certainly not all.

- Cloud-born technologies can also assist with the process aspect, as things such as real-time data streaming were previously difficult to implement. High-frequency data collection from multiple sources simultaneously (sometimes thousands of sources) is a problem that has generally been solved with cloud technologies, even if it is still tricky to implement.

An example of a cloud-based architecture for Big Data that could be used in the “back end” of an IOT solution is as follows:

As mentioned though, this typically isn’t a one-way process. Once we have visibility of what’s going on at the Edge, thanks to the data being collected by IOT devices, what then? We then enter the world of “course corrections” in manufacturing environments, maintenance alerts in service environments, and critical alarms where immediate action needs to be taken. This is the ideal time, then, to talk about “Feedback Loops”.

The beauty of considering feedback loops when designing processes is that they can be applied to many scenarios. In our “course correction” example, which would be common in a manufacturing environment, we collect data from IOT sensors around the manufacturing environment. Once all the big data processing has happened in the cloud, perhaps in near real time, we can see whether the process is on track. An automated process can then be kicked off to make adjustments where needed. This would be fairly common in today’s manufacturing world.
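As a rough illustration of the course-correction loop described above, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `Reading` type, the oven sensor names, the 180 degree target and the 5 degree tolerance are all hypothetical, and a real deployment would receive readings from a streaming service rather than a hard-coded list.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One hypothetical sensor reading arriving from the Edge."""
    sensor_id: str
    temperature_c: float


def course_correction(readings, target_c=180.0, tolerance_c=5.0):
    """Return the setpoint adjustment (degrees C) for a batch of readings.

    0.0 means the process is on track; any other value is the change
    that would bring the average reading back to the target.
    """
    observed = mean(r.temperature_c for r in readings)
    drift = observed - target_c
    if abs(drift) <= tolerance_c:
        return 0.0  # within tolerance, nothing to feed back
    return -drift   # push the setpoint in the opposite direction


# Hypothetical batch collected by the cloud pipeline.
batch = [Reading("oven-1", 188.0), Reading("oven-2", 190.0)]
adjustment = course_correction(batch)
print(f"Adjust setpoint by {adjustment} degrees C")
```

The point of the sketch is the shape of the loop: data flows up from the Edge, a decision is made centrally, and an instruction flows back down.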

However, consider a scenario where IOT + Big Data has been deployed to an end customer. An increasingly common example would be a tracking device in a vehicle. For many years we’ve had a scenario where, if the car is stolen, the feedback loop triggers and contacts law enforcement to search for the vehicle. However, using cloud-based real-time analytics, providers can now analyse driving styles, leading to a new revenue stream (insurance), and provide location-based services to customers. The latter is a perfect example of how an IOT + Big Data solution can really bring about digital transformation.

But there’s more……

That isn’t where it ends though. While traditional reporting can tell us :

- What has happened in the past

- What is happening in the present

… you could then apply Machine Learning to the data being collected to try to predict what will happen in the future. With the machine learning technology available today, this has unlocked some impressive scenarios. Not surprisingly, one of the fastest growing IOT use cases today is the “predictive maintenance” scenario. In this solution, data readings from IOT sensors placed in production equipment are processed against a trained machine learning model to predict maintenance requirements and even failure rates. This has not only changed the user experience and reliability of devices and machinery, but changed the business models of suppliers as well.
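To make the predictive maintenance idea concrete, here is a small Python sketch using only the standard library. It is not how any particular vendor does it: a nearest-centroid classifier stands in for whatever model a real project would train, and the history data, feature choice (vibration and temperature) and function names are all hypothetical.

```python
from math import dist
from statistics import mean

# Hypothetical history: (vibration in mm/s, temperature in C) and
# whether the machine failed shortly after the reading was taken.
HISTORY = [
    ((2.0, 60.0), False),
    ((2.5, 62.0), False),
    ((3.0, 61.0), False),
    ((7.5, 80.0), True),
    ((8.0, 85.0), True),
    ((9.0, 83.0), True),
]


def train(history):
    """Compute one centroid (average reading) per label."""
    centroids = {}
    for label in {lbl for _, lbl in history}:
        points = [features for features, lbl in history if lbl == label]
        centroids[label] = tuple(mean(axis) for axis in zip(*points))
    return centroids


def needs_maintenance(model, reading):
    """Predict True when the reading is closer to the 'failed' centroid."""
    return dist(reading, model[True]) < dist(reading, model[False])


model = train(HISTORY)
print(needs_maintenance(model, (8.2, 84.0)))
print(needs_maintenance(model, (2.2, 59.0)))
```

A production model would normally scale the features and be trained on far more data, but the flow is the same: train on historical sensor readings, then score new readings as they arrive.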

A good example of the above is the Thyssen-Krupp Predictive Maintenance Reference video here: https://www.youtube.com/watch?v=wHHaqgONRSQ

As more data is collected from more and more IOT devices, even more accurate machine learning models will be created, utilizing the scale and power of the cloud to process these models even faster. The “on-demand” nature of the cloud allows even small organizations to rent this horsepower and do this efficiently, whereas in the old on-premises-only world, such a company may never have invested in the horsepower to do so. This means that the barrier to entry for all sectors and industries to implement such IOT+AI solutions has been lowered, with local availability of cloud and ever-decreasing IOT device costs.

All of the above is really exciting, and no doubt we will see even more amazing use cases emerge in the near future. Before we wrap up, however, let me add one more piece to this picture.

FPGAs

While processing machine learning models in the cloud provides the predictive capability in an IOT+AI solution, there will be use cases where the model needs to be updated and processed even more quickly. What if a smaller version of the model could be processed right at the Edge? Remember that neural networks require tremendous amounts of processing power, and today’s machine learning platforms have relied on GPUs (shader hardware, not traditional CPUs) to process these in the cloud. A breakthrough happening right now is the emergence of FPGA (Field Programmable Gate Array) based IOT hardware, which will be able to process machine learning models right at the Edge. This scenario will lead to more refined use cases for IOT+AI solutions.

In summary, the move towards standards for IOT devices, the greater availability of cloud based services, as well as developments such as FPGA, are all converging to change the business landscape rapidly. If you’re not thinking about how IOT+AI could improve and even redefine your organization, I would imagine that your competitor certainly is doing so right now.

Categories: AI, Azure, Computers and Internet

AI, Artificial Intelligence, Azure, IOT, Machine Learning, Sphere

SQL 2019, PolyBase and more… with Buck Woody!

September 12, 2019

Buck Woody is well known in the field of Data Science. He joined us this week in Johannesburg to promote SQL 2019 and do some training events with us (including SQL Saturday!).

I chat to Buck about what excites him about R in SQL, PolyBase, and the future of Data Science!

Categories: SQL Server

Learn about Consortium Blockchain solutions

July 12, 2019

I interview Fabian van der Merwe, and we talk about getting started with consortium Blockchain solutions, in Azure, which probably has the most comprehensive Blockchain stack.

Learn more here : Azure Blockchain Overview

Categories: Blockchain, Tech

Power BI – Maps not displaying the correct location.

April 17, 2024

Here’s a common issue with potentially an easy fix (depending on your geography).

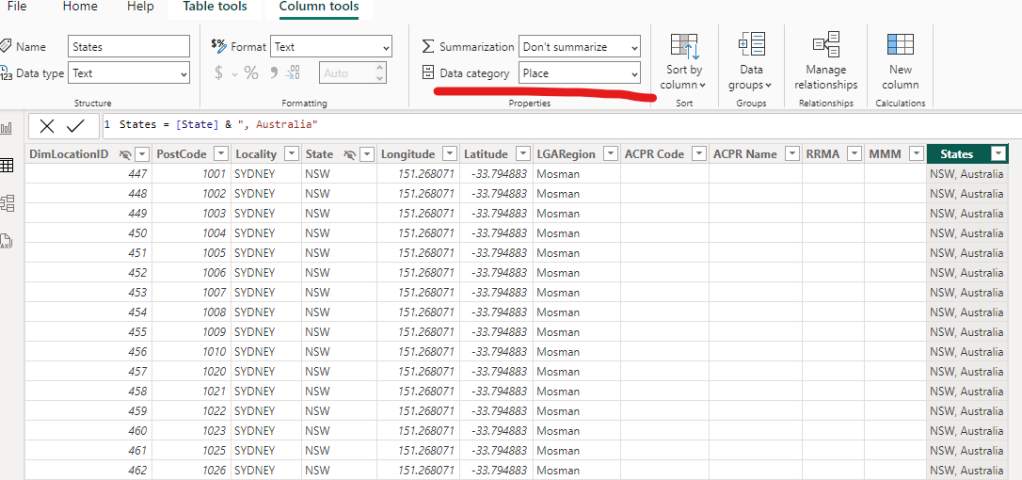

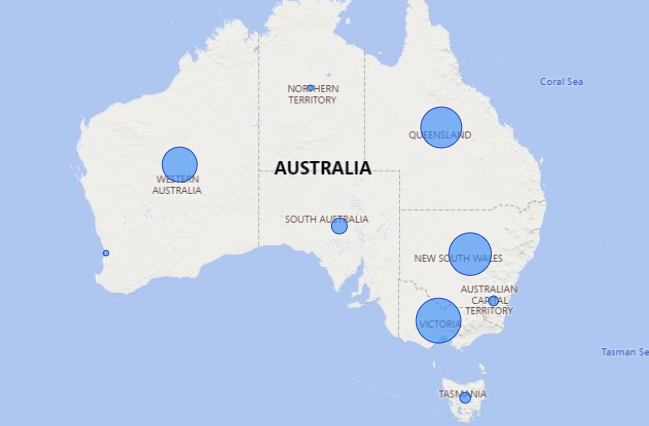

You have location data in your dataset, but when Power BI displays it on a map, it gets it all wrong. This looks especially bad, for some reason, when using Q&A. In the image below you’ll see that for the state WA representing “Western Australia”, Power BI actually plots this to WA in the USA (Washington State).

Now you can try updating the data field to include the country name (i.e. “WA, Australia”), and that solves it in some cases, but not for my report. What I ALSO had to do was go to the Table View, select the column (in this case my new column with the country name; the old one was hidden) and, at the top, change the Data Category to “Place”.

After doing that, it works !

Categories: Power BI

Azure Data Factory – Invalid Template, The ‘runAfter’ property of template action contains non-existent action

November 7, 2023

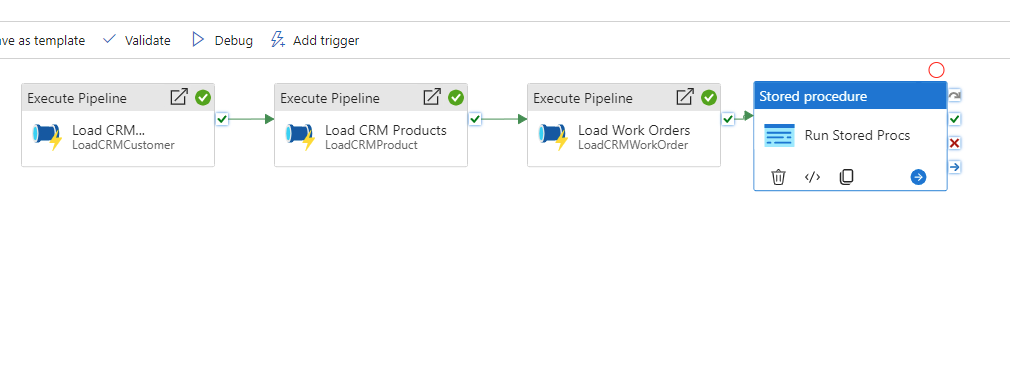

Quick post. Some of you might be building ADF pipelines and getting an error like the above. You won’t believe how simple this one is. Let’s say your pipeline looks like the below:

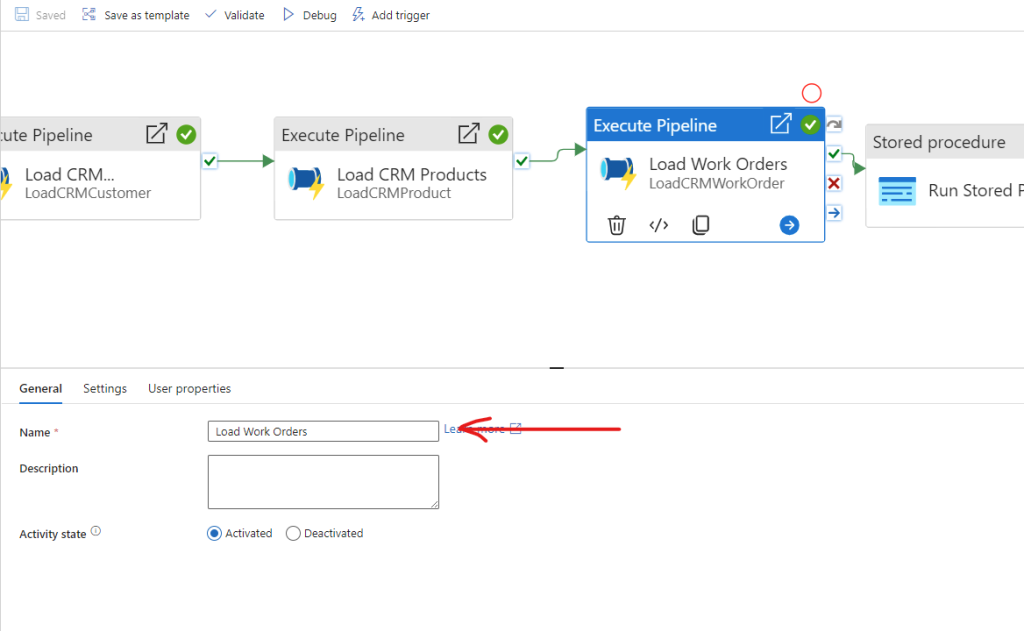

The pipeline is not running and is complaining about the ‘runAfter’ property. Sometimes this happens if the name of the previous block has a space after it. So in the above, we go to the properties of Block 3, “Load Work Orders”.

Just make sure there isn’t a space after the name.
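If you have a lot of activities, you could check for this outside the designer. The Python sketch below scans exported pipeline JSON for activity names with stray whitespace and for dependencies that point at a name that doesn’t exist. It assumes the JSON has a `properties.activities` list where each activity has a `name` and an optional `dependsOn` list of `{"activity": ...}` entries; verify that against your own export. The `find_name_problems` helper and the sample pipeline are hypothetical.

```python
import json


def find_name_problems(pipeline_json):
    """Return human-readable problems with activity names in a pipeline."""
    activities = json.loads(pipeline_json)["properties"]["activities"]
    names = {a["name"] for a in activities}
    problems = []
    for activity in activities:
        name = activity["name"]
        # A trailing (or leading) space is invisible in the designer.
        if name != name.strip():
            problems.append(
                f"activity name {name!r} has leading or trailing whitespace"
            )
        # Each dependency must match an existing activity name exactly.
        for dep in activity.get("dependsOn", []):
            if dep["activity"] not in names:
                problems.append(
                    f"{name!r} depends on unknown activity {dep['activity']!r}"
                )
    return problems


# Hypothetical pipeline with a trailing space after "Load Work Orders".
sample = json.dumps({
    "name": "LoadPipeline",
    "properties": {
        "activities": [
            {"name": "Load Work Orders ", "type": "Copy"},
            {
                "name": "Archive",
                "type": "Copy",
                "dependsOn": [
                    {
                        "activity": "Load Work Orders",
                        "dependencyConditions": ["Succeeded"],
                    }
                ],
            },
        ]
    },
})

for problem in find_name_problems(sample):
    print(problem)
```

Wrapping the names in quotes via `!r` makes the stray space visible in the output, which is exactly what the designer hides.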

Categories: Azure

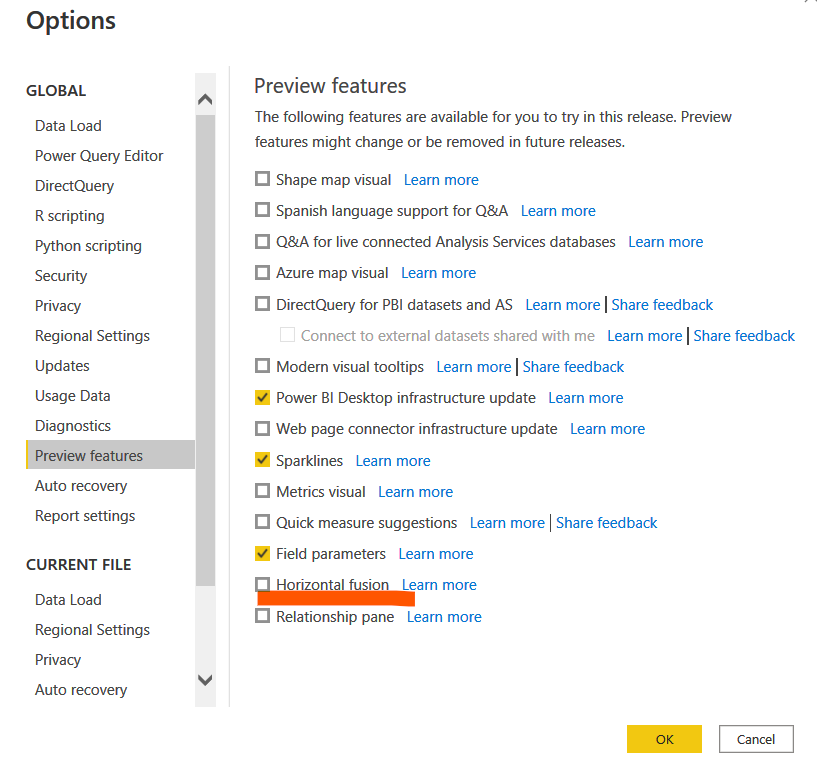

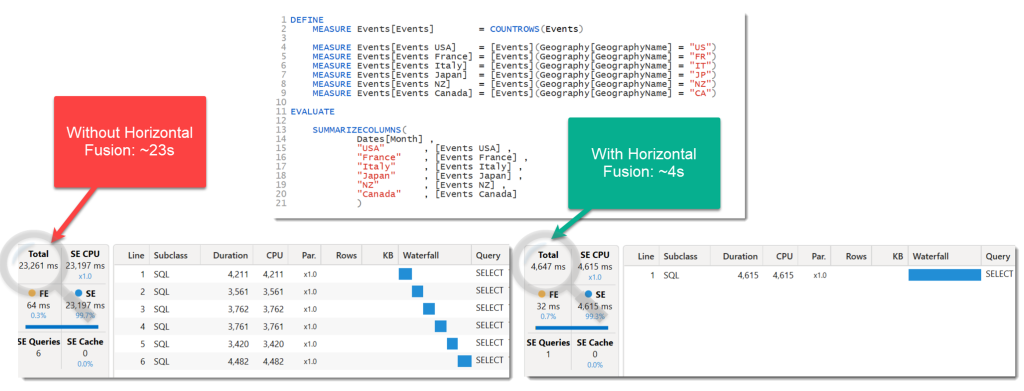

Power BI “Horizontal Fusion”

September 28, 2022

It’s been a while since we had a snazzy tech term which promises amazing performance gains… (remember “Hekaton” and “VertiPaq”…),

…but here’s something to bring back the old glory days… the impressively named “Horizontal Fusion”.

Now this one seems to be an engine improvement in Power BI, specifically in how it turns DAX queries into queries against your source, with noticeable performance improvements, especially in DirectQuery mode. The best part is that you enable it and it works in the background.

Once that’s done you should see fewer, more efficient queries generated against your sources. Apparently it even works with Snowflake.

This is the type of new feature I like, one where I don’t have to do anything….

More information here : https://powerbi.microsoft.com/en-us/blog/announcing-horizontal-fusion-a-query-performance-optimization-in-power-bi-and-analysis-services/

Categories: Uncategorized

Intro to Quantum Computing

March 30, 2021

I interview Kimara Naicker, PhD in Physics and researcher at the University of Natal, and we discuss the basics of Quantum Physics and Computing.

Categories: Uncategorized

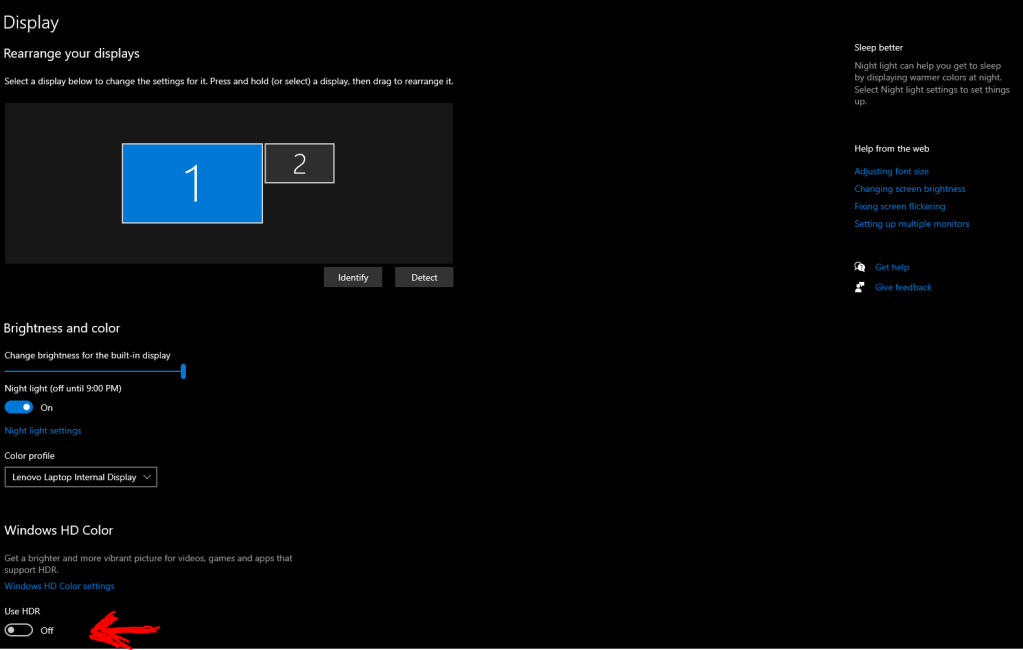

Windows 10 – cannot adjust brightness / screen dim

November 19, 2020

Quick tip – I had this annoying behaviour on my main laptop where suddenly the screen was too dim and I couldn’t adjust the brightness.

There are many solutions on the internet including :

- Updating drivers

- Adjusting display settings in your power profile.

None of that worked for me. What I DID find was that on the Display screen, just under the brightness setting, you may see an option for “HDR”. For some reason this was set to “On” on my machine. Simply turning it off restored the brightness to the display.

Categories: Uncategorized

Simplifying ML with AZURE

October 30, 2020

Here’s the Azure ML walkthrough you’ve been waiting for. In 30 mins I take you through a broad overview with demos of the 3 main areas of Azure ML. Enjoy.

Categories: AI, Azure

AI, IOT, Azure, Sphere, ML, Microsoft

The state of AI

September 16, 2020

Recently at AI EXPO AFRICA 2020, I was interviewed by Dr Nick Bradshaw, where we had a quick chat on the current state of AI. I shared my thoughts on the progress I’ve seen over the last 2-3 years, but also some of the challenges facing us in the future. These include:

- Education – while AI will definitely have an impact on certain types of jobs in the future, corporations and governments need to make education accessible (and cheaper) to move people up the value chain. Countries that do this will enable their populace to enter new fields that crop up in the job market; countries that don’t will suffer tremendously. I will explore the impact of AI on jobs from an economic perspective in an upcoming article.

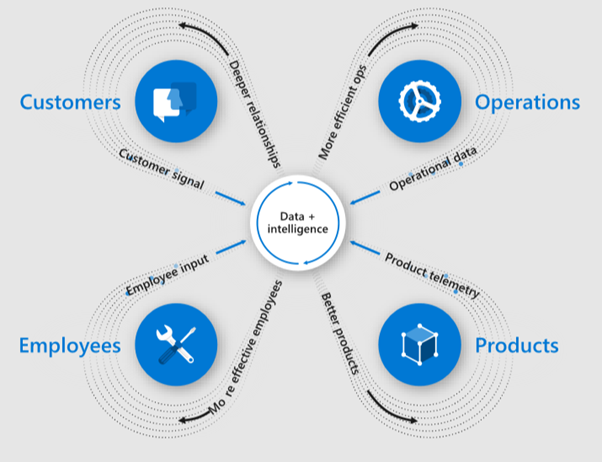

- Use cases – Until recently, technologists were struggling to find business buy-in for Machine Learning use cases. While this has improved, I proposed the Microsoft “4 pillars” of digital transformation as a guideline for developing these use cases.

When you focus on problem areas, like how to improve the customer experience or how to make internal operations more efficient, you will develop use cases for ML that are measurable in their value to the business.

Lastly, I also mentioned that the penetration of AI will continue to happen very swiftly and transparently. I spoke of how microcontrollers / CPUs slowly spread across equipment / cars / appliances in the 20th century, bringing simple logic to those devices and improving operations. Consider when cars moved to electronic engine management systems: you could now build simple IF-THEN-ELSE decisions into the software that was running the engine, leading to more efficient operation.

Now, we see ML models being deployed everywhere, assisted by ML-capable hardware (for example, neural network engines in CPUs). An example I used was my phone unlocking by seeing my face: there is a neural network chip on board, running software with a trained model on it. This progress happened seamlessly. All most consumers knew was that their new model phone could do this, without knowing any of the above. And so it will continue……

The video is below :

Categories: AI

AI, Artificial Intelligence, Machine Learning, ML